Amanda Knox was behind bars when she befriended a Catholic priest.

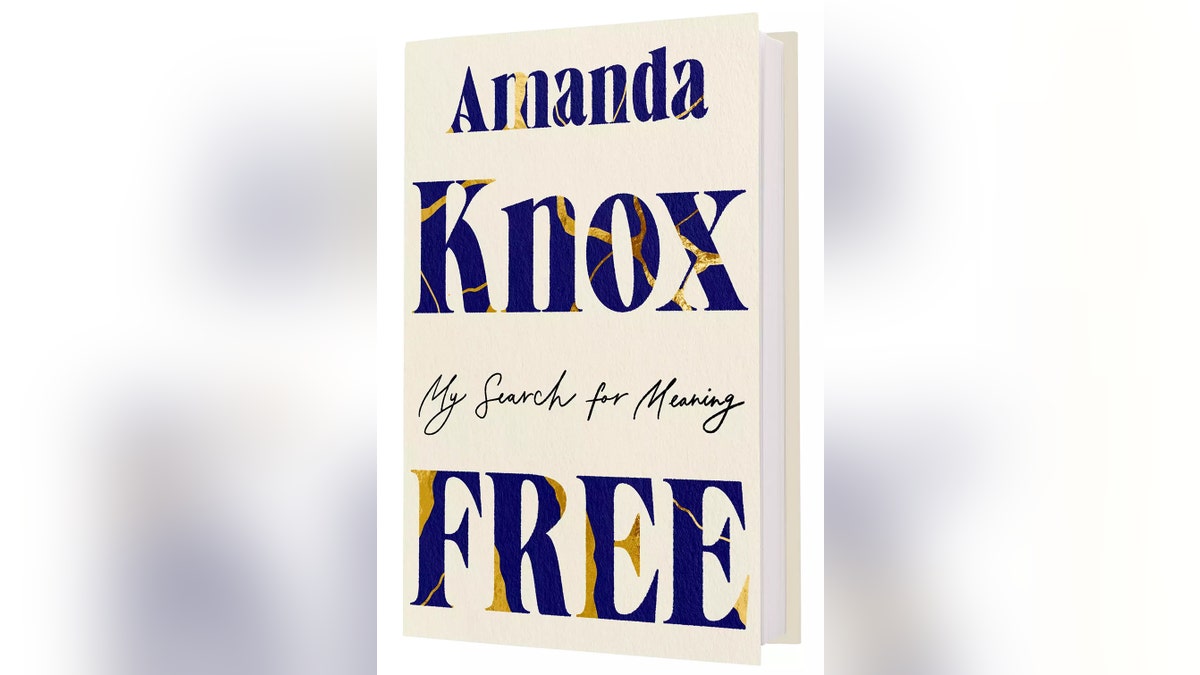

The mother of two, who spent nearly four years in an Italian prison, has written a new book, “Free: My Search for Meaning.” It recounts the struggles the 37-year-old endured in attempting to reintegrate into society. Knox also reflects on what it was like returning to a more normal life, including seeking a life partner, finding a job and walking out in public.

The Seattle native, who identifies as an atheist, told Fox News Digital that prison chaplain Don Saulo not only became her best friend during those years but also gave her hope when she felt hopeless.

In her new book, “Free: My Search for Meaning,” Amanda Knox described how a priest was willing to be her friend while she was behind bars. (Stephen Brashear/Getty Images)

“He was a good man, a friend and a philosopher,” Knox told Fox News Digital. “He was the family who was there for me in prison when the rest of my family couldn’t be physically there with me. And he was someone who wasn’t just kind to me, but who was willing to engage with me on a philosophical level. He saw my humanity. And he genuinely wanted to spend time with me.

Amanda Knox was a student in Perugia studying abroad when her roommate, Meredith Kercher, was found stabbed to death in 2007. (Franco Origlia/Getty Images)

“He spoke to me in terms of his ideology and his faith, but there were truths in what he said,” she shared. “It would shift my perspective from one of utter despair to one of hope. And on days when I didn’t have hope, he showed me how to find value in the experience that I had. The idea that if you pray to God for strength, he doesn’t give you strength, he gives you an opportunity to be strong — that resonated with me.”

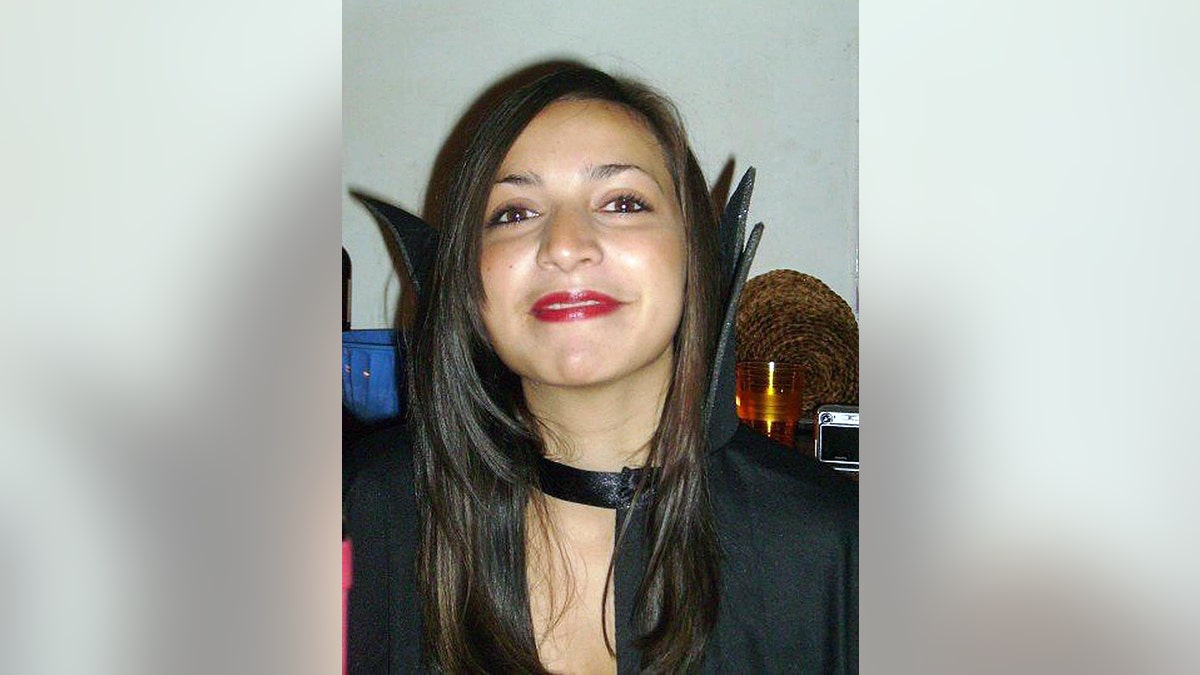

Knox was a 20-year-old student in Perugia studying abroad when her roommate, Meredith Kercher, was found stabbed to death in 2007. The 21-year-old was found in the cottage they shared with two Italian women.

British student Meredith Kercher was murdered in 2007. She was 21. (Franco Origlia/Getty Images)

The case made global headlines as suspicion fell quickly on Knox and her boyfriend of just days, Raffaele Sollecito.

Knox wrote that while she was in prison, a nun had approached her. But when Knox told her she wasn’t religious, the nun replied that she was “no better than an animal without God.”

Amanda Knox, left, and her boyfriend at the time, Raffaele Sollecito, of Italy, in 2007, outside the rented house where 21-year-old British student Meredith Kercher was found dead in Perugia, Italy. (File photo/ Associated Press)

The priest, on the other hand, suggested they could talk about whatever Knox wanted at his office.

Amanda Knox is led away from Perugia’s Court of Appeal by police officers after the first session of her appeal of her murder conviction Nov. 24, 2010, in Perugia, Italy. (Franco Origlia/Getty Images)

“I don’t remember how he broke the ice,” Knox wrote. “By asking how I was doing? All I know is that I found myself gushing [in] desperation.”

Over the years, Amanda Knox has been attempting to clear her name. (Getty Images)

Knox also described how she would sing from her cell. Saulo, who overheard her one day, asked if she’d ever played instruments. When Knox told him that she used to play the guitar, he exclaimed, “I have a guitar!”

“You could play it during mass. You could even come to my office to practice,” he told her.

Amanda Knox’s book, “Free: My Search for Meaning,” is out now. (Grand Central Publishing)

Knox admitted she “didn’t love the idea of mass,” but the idea of leaving her cell to play the guitar was “one small link to the life I was living before this nightmare.”

“And so began our musical relationship,” Knox wrote. “Once or twice a week, I was allowed to spend an hour in Don Saulo’s office practicing hymns on the guitar, and then, during mass on Saturdays, I’d play and sing those religious tunes.”

Amanda Knox spent nearly four years behind bars in Italy. (Federico Zirilli/AFP via Getty Images)

She also described how Saulo had a small electronic keyboard and taught her to play the piano. And when he learned she loved studying languages, he began teaching her Latin phrases.

Today, Amanda Knox is a married mother of two. (Lucien Knuteson)

Knox said Saulo’s kindness brightened her dark days. She told Fox News Digital that standing up for yourself in prison meant violence.

“I think a lot of people might imagine just horrible things between inmates, and it’s true,” said Knox. “I was surrounded by women who were either struggling with mental illness, drug addiction or just general PTSD from long-term abuse and neglect.

Amanda Knox claimed the male guards tried to take advantage of her. (Claudia Greco/Reuters)

“There was a lot of dysfunction in the community of women that I belonged to. But, without a doubt, the worst experiences that I had were with the male guards, who had absolute power over me and who I could not protect myself from.

Amanda Knox was exonerated of the murder of Meredith Kercher in March 2015. (Oli Scarff/Getty Images)

“I was in a locked room with them, and they had the key,” Knox recalled. “If I ever spoke up, no one would believe me because [to them] I was the lying murdering whore.

“I was absolutely at the mercy of male guards who tried to take advantage of me … and it was just horrifying,” Knox claimed.

Amanda Knox said she never felt judged by Don Saulo. (Federico Zirilli/AFP)

In her book, Knox wrote that Saulo “never judged me, never told me who I was, even as the world called me a monster.”

Don Saulo encouraged Amanda Knox to sing and play guitar. (Vincenzo Pinto/AFP via Getty Images)

“I felt supported by him in cultivating a mindset of compassion and empathy and gratitude, that it was this mindset that would allow me to understand what had happened to me,” she wrote.

One of the people she dedicated her book to was Saulo, “for holding my hand when no one else could.”

One of the people Amanda Knox dedicated her book to was Don Saulo. (Oli Scarff/Getty Images)

“I remain an atheist, but Don Saulo taught me to value much of the wisdom in the teachings of Jesus,” she wrote. “Turning the other cheek, the golden rule, a radical refusal of judgment, an acceptance of all people – high and low, sinner and saints. No one deserves God’s grace, and yet, it is there for everyone. This is how I think about compassion. It is not kindness if it is reserved for the just, the good, the kind.”

Rudy Guede was eventually convicted of murdering Meredith Kercher. (Tiziana Fabi/AFP/Getty Images)

Rudy Hermann Guede of the Ivory Coast was eventually convicted of murder after his DNA was found at the crime scene. The European Court of Human Rights ordered Italy to pay Knox damages for the police failures, noting she was vulnerable as a foreign student not fluent in Italian.

Knox returned to the United States in 2011 after being freed by an appeals court in Perugia and has established herself as a global campaigner for the wrongly convicted. Over the years, she has attempted to clear her name.

Amanda Knox’s parents are seen here speaking to the press. (Tiziana Fabi/AFP via Getty Images)

Today, Knox is a board member of The Innocence Center, a nonprofit law firm that aims to free innocent people from prison. She also frequently discusses how high-profile cases affect loved ones on a podcast she hosts with her husband, “Labyrinths.”

Amanda Knox arrives with her husband Christopher Robinson (left) at the courthouse in Florence June 5, 2024, before a hearing in a slander case related to her jailing and later acquittal for the murder of her British roommate. (Tiziana Fabi/AFP via Getty Images)

Guede, 37, was freed in 2021 after serving most of his 16-year sentence.

Knox told Fox News Digital she was “haunted” by the spirit of Kercher.

A view of the house that was the site of the Nov. 1, 2007, murder of British student Meredith Kercher in Perugia, Italy. (Franco Origlia/Getty Images)

“I think about her every day, especially when I consider what could have happened to me,” she explained. “My fate very well could have been hers, and her fate could very well have been mine. We were both two young women who went to study abroad. Our lives were ahead of us. Everything was going well for us. And then a man broke into our home and killed her.”

A floral tribute with photographs of Meredith Kercher at her funeral Dec. 14, 2007, at Croydon Parish Church, South London. (Peter Macdiarmid/Getty Images)

“If it hadn’t been for the fact that I just happened to meet a young, kind man five days before the crime occurred, I would very well be dead too now,” she continued.

“When I think about her … [I have] just the utter realization of the fragility, the impermanence and preciousness of life. What a privilege it is to live. And how important it is of a task to fight for your life and to make it worth living while you have it. I think about that.

Relatives of murdered British exchange student Meredith Kercher — Stephanie Kercher (right), Arline Kercher (left) and father John Kercher (center) — arrive for a news conference in Perugia Nov. 6, 2007. (STR/AFP via Getty Images)

“One of the biggest things that I’ve had to struggle with is unpacking the fact that a friend of mine’s death is wrapped up in my identity. … My identity is twisted up in [her family’s] deepest pain. The truth of what happened to her and the justice that was denied to her is an ongoing, painful thing for me and many others. When people say, ‘Meredith has been lost in this story,’ they’re not wrong.”

Amanda Knox told Fox News Digital she hopes to connect with Meredith Kercher’s family someday. (STR/AFP via Getty Images)

Knox said she tried reaching out to Kercher’s family “a bit ago,” but has gotten “radio silence.” Fox News Digital reached out to Kercher’s family for comment.

“I just wish … they would connect with me so that we can grieve together and try to make meaning out of this tragedy together,” said Knox.

Amanda Knox was a guest on the TV program “Cinque Minuti” in Rome June 10, 2024. (Massimo Di Vita/Archivio Massimo Di Vita/Mondadori Portfolio via Getty Images)

Knox knows she can never return to her old life. But she hopes, after telling her story, she can move forward with her family. That, she said, gives her hope today.

Amanda Knox acknowledges the cheers of supporters while her mother, Edda Mellas, comforts her Oct. 4, 2011, in Seattle. (Stephen Brashear/Getty Images)

“There’s never going to be a day when every single person in the world is going to realize that I’ve been wronged and harmed,” said Knox. “I have to then ask myself, ‘Can I live with that? What can freedom mean to me today?’

“I think that has been a really important shift in my perspective that I try to convey in the book, going from feeling that I am trapped in my own life … to feeling like I can push forward. It’s allowing me to feel like I can make choices again in light of all this backstory. That gives me momentum.”

The Associated Press contributed to this report.